What Are Neural Networks

A Beginner's Guide to Neural Networks and Deep Learning

Neural networks are a set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling or clustering raw input. The patterns they recognize are numerical and contained in vectors. All real-world data, be it images, sound, text or time series, must be translated into those vectors.

Neural networks help us cluster and classify. You can think of them as a clustering and classification layer on top of the data you store and manage. They help to group unlabeled data according to similarities among the example inputs, and they classify data when they have a labeled dataset to train on. (Neural networks can also extract features that are fed to other algorithms for clustering and classification. So you can think of deep neural networks as components of larger machine-learning applications that involve algorithms for classification and other tasks.)

What kind of problems does deep learning solve, and more importantly, can it solve yours? To know the answer, you need to ask questions:

What outcomes do I care about?


Those outcomes are labels that could be applied to data: for example, spam or not_spam in an email filter, good_guy or bad_guy in fraud detection, angry_customer or happy_customer in customer relationship management.

Do I have the data to accompany those labels? That is, can I find labeled data, or can I create a labeled dataset where spam has been labeled as spam, in order to teach an algorithm the correlation between labels and inputs? Services like AWS Mechanical Turk, Figure Eight or Mighty.ai can help with the labeling.

Deep learning maps inputs to outputs. It finds correlations. It is known as a “universal approximator”, because it can learn to approximate an unknown function f(x) = y between any input x and any output y, assuming they are related at all (by correlation or causation, for example). In the process of learning, a neural network finds the right f, or the correct manner of transforming x into y, whether that be f(x) = 3x + 12 or f(x) = 9x - 0.1. Here are a few examples of what deep learning can do.

Classification

All classification tasks depend upon labeled datasets. Humans must transfer their knowledge to the dataset so that a neural network can learn the correlation between labels and data.

This is known as supervised learning. Examples include:

- Detect faces, identify people in images, recognize facial expressions (angry, joyful).
- Identify objects in images (stop signs, pedestrians, lane markers).
- Recognize gestures in video.
- Detect voices, identify speakers, transcribe speech to text, recognize sentiment in voices.
- Classify text as spam (in emails) or fraudulent (in insurance claims); recognize sentiment in text (customer feedback).

Any labels that humans can generate can be used to train a neural network. The same goes for any outcomes that you care about and that correlate to data.

Clustering

Clustering or grouping is the detection of similarities. Deep learning does not require labels to detect similarities. Learning without labels is called unsupervised learning.
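Detecting similarity without labels usually comes down to comparing the vectors that data has been translated into. The sketch below ranks stored items by cosine similarity to a query. Cosine similarity is a common similarity measure chosen here for illustration. The item names and vector values are hypothetical, and the sketch assumes the items have already been encoded as vectors, for example by a trained network.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query: Sequence[float], items: Dict[str, List[float]],
                 k: int = 2) -> List[Tuple[str, float]]:
    """Rank stored items by similarity to the query; no labels are involved."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical vectors standing in for what a trained network might output.
items = {
    "cat_photo": [0.9, 0.1, 0.0],
    "kitten_photo": [0.85, 0.15, 0.05],
    "invoice_scan": [0.0, 0.2, 0.95],
}
for name, score in most_similar([0.88, 0.12, 0.02], items):
    print(f"{name}: {score:.3f}")
```

Items that point in nearly the same direction score close to 1.0. Unrelated items score near 0, so the two photos surface ahead of the invoice without anyone labeling them.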

Unlabeled data is the majority of data in the world. One rule of thumb in machine learning is that the more data an algorithm can train on, the more accurate it tends to be. Therefore, unsupervised learning has the potential to produce highly accurate models. Two common uses:

- Search: comparing documents, images or sounds to surface similar items.
- Anomaly detection: the flipside of detecting similarities is detecting anomalies, or unusual behavior. In many cases, unusual behavior correlates highly with things you want to detect and prevent, such as fraud.

Predictive Analytics: Regressions

With classification, deep learning is able to establish correlations between, say, pixels in an image and the name of a person.

You might call this a static prediction. By the same token, exposed to enough of the right data, deep learning is able to establish correlations between present events and future events. It can run regression between the past and the future. The future event is like the label in a sense. Deep learning doesn’t necessarily care about time, or the fact that something hasn’t happened yet.
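The idea that a future event plays the role of a label can be made concrete. The sketch below turns a time series into (past window, next value) pairs, which is the shape a supervised learner expects. The readings and the window size of 3 are hypothetical choices for illustration.

```python
from typing import List, Sequence, Tuple


def to_supervised(series: Sequence[float],
                  window: int) -> List[Tuple[List[float], float]]:
    """Turn a time series into (past window, next value) training pairs.

    The value that comes next plays the role of the label.
    """
    pairs = []
    for start in range(len(series) - window):
        past = list(series[start:start + window])
        future = series[start + window]
        pairs.append((past, future))
    return pairs


readings = [10.0, 12.0, 13.0, 15.0, 18.0]  # hypothetical sensor readings
for past, future in to_supervised(readings, window=3):
    print(past, "->", future)
# prints:
# [10.0, 12.0, 13.0] -> 15.0
# [12.0, 13.0, 15.0] -> 18.0
```

Once the series is framed this way, the same machinery that learns "pixels to name" can learn "recent past to next value".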

Given a time series, deep learning may read a string of numbers and predict the number most likely to occur next. Examples include:

- Hardware breakdowns (data centers, manufacturing, transport).
- Health breakdowns (strokes and heart attacks, predicted from vital stats and data from wearables).
- Customer churn (predicting the likelihood that a customer will leave, based on web activity and metadata).
- Employee turnover (ditto, but for employees).

The better we can predict, the better we can prevent and pre-empt. As you can see, with neural networks, we’re moving towards a world of fewer surprises.

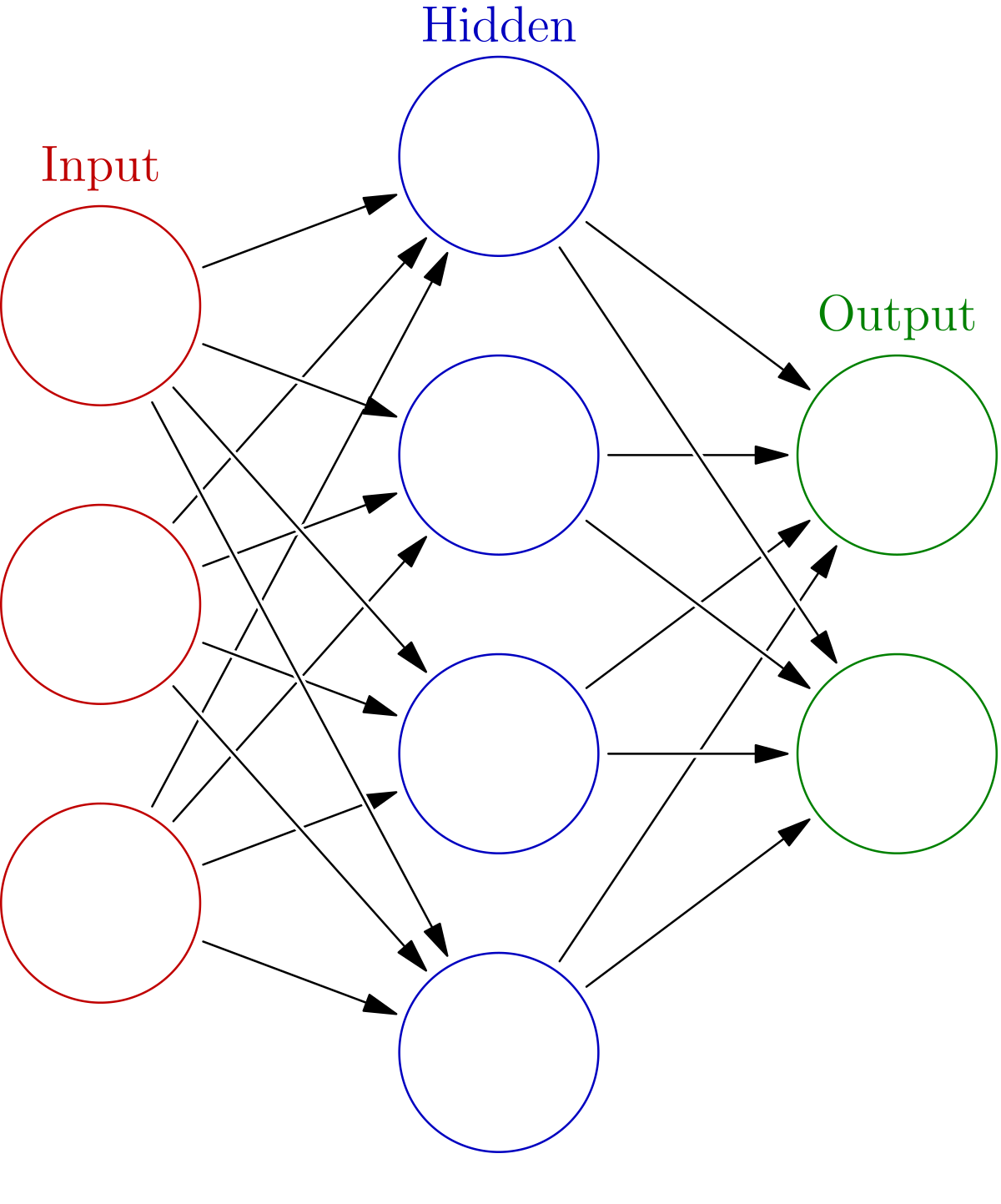

Not zero surprises, just marginally fewer. We’re also moving toward a world of smarter agents that combine neural networks with other algorithms to attain goals.

With that brief overview of what deep learning can do, let’s look at what neural nets are made of.

Deep learning is the name we use for “stacked neural networks”; that is, networks composed of several layers.

The layers are made of nodes. A node is just a place where computation happens. It is loosely patterned on a neuron in the human brain, which fires when it encounters sufficient stimuli. A node combines input from the data with a set of coefficients, or weights, that either amplify or dampen that input. In doing so, it assigns significance to inputs with regard to the task the algorithm is trying to learn (e.g., which input is most helpful in classifying data without error?). These input-weight products are summed. The sum is then passed through the node’s so-called activation function, which determines whether and to what extent that signal should progress further through the network to affect the ultimate outcome, say, an act of classification. If the signal passes through, the neuron has been “activated.”

Here’s a diagram of what one node might look like.

A node layer is a row of those neuron-like switches that turn on or off as the input is fed through the net. Learning then repeats three steps:

$$\text{input} \times \text{weight} = \text{guess}$$

$$\text{ground truth} - \text{guess} = \text{error}$$

$$\text{error} \times \text{weight's contribution to error} = \text{adjustment}$$

The three pseudo-mathematical formulas above account for the three key functions of neural networks: scoring input, calculating loss and applying an update to the model. The update then begins the three-step process over again. A neural network is a corrective feedback loop, rewarding weights that support its correct guesses, and punishing weights that lead it to err.

Let’s linger on the first step above.

Despite their biologically inspired name, artificial neural networks are nothing more than math and code, like any other machine-learning algorithm.
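The three steps named above (scoring input, calculating loss and applying an update) can be sketched for a single weight and a single training example. This is a minimal illustration, not any particular library's method. It assumes a squared-error loss. Under that loss, a weight's contribution to the error for guess = input * weight is simply the input. The learning rate of 0.1 is a hypothetical choice that keeps each adjustment small.

```python
def train_single_weight(x: float, target: float, weight: float = 0.0,
                        learning_rate: float = 0.1, steps: int = 50) -> float:
    """Repeat the loop: score input, calculate loss, apply an update."""
    for _ in range(steps):
        guess = x * weight          # input * weight = guess
        error = target - guess      # ground truth - guess = error
        # For guess = x * weight (squared-error loss assumed), the weight's
        # contribution to the error is x, so the adjustment is error * x.
        adjustment = error * x
        weight += learning_rate * adjustment  # small step toward less error
    return weight


w = train_single_weight(x=2.0, target=6.0)
print(round(w, 4))  # prints 3.0
```

With an input of 2 and a ground truth of 6, the weight settles at 3. That is the value for which input * weight matches the ground truth, so the error, and with it the adjustment, shrinks toward zero.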

In fact, anyone who understands linear regression, one of the first methods you learn in statistics, can understand how a neural net works. In its simplest form, linear regression is expressed as

$$\hat{Y} = bX + a$$

where Yhat ($\hat{Y}$) is the estimated output, X is the input, b is the slope and a is the intercept of a line on the vertical axis of a two-dimensional graph. (To make this more concrete: X could be radiation exposure and Yhat could be the cancer risk; X could be daily pushups and Yhat could be the total weight you can bench-press; X could be the amount of fertilizer and Yhat the size of the crop.) You can imagine that every time you add a unit to X, Yhat changes by the same amount, b, no matter how far along you are on the X axis. That simple relation between two variables moving up or down together is a starting point.

The next step is to imagine multiple linear regression, where you have many input variables producing an output variable.

It’s typically expressed like this:

$$\hat{Y} = b_1 X_1 + b_2 X_2 + b_3 X_3 + a$$

(To extend the crop example above, you might add the amount of sunlight and rainfall in a growing season to the fertilizer variable, with all three affecting Yhat.)

Now, that form of multiple linear regression is happening at every node of a neural network. For each node of a single layer, input from each node of the previous layer is recombined with input from every other node.

That is, the inputs are mixed in different proportions, according to their coefficients, which are different leading into each node of the subsequent layer. In this way, a net tests which combination of input is significant as it tries to reduce error.

Once you sum your node inputs to arrive at Yhat, it’s passed through a non-linear function. Here’s why: if every node merely performed multiple linear regression, Yhat would increase linearly and without limit as the X’s increase, but that doesn’t suit our purposes.

What we are trying to build at each node is a switch (like a neuron) that turns on and off. It depends on whether or not the node should let the signal of the input pass through to affect the ultimate decisions of the network.

When you have a switch, you have a classification problem. Does the input’s signal indicate the node should classify it as enough or not_enough, on or off? A binary decision can be expressed by 1 and 0. Logistic regression is a non-linear function that squashes input to translate it to a space between 0 and 1.

The nonlinear transforms at each node are usually s-shaped functions similar to logistic regression. They go by the names of sigmoid (meaning “S-shaped”, after the Greek letter sigma), tanh, hard tanh, etc., and they shape the output of each node.
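The node and node-layer computation described above can be sketched in plain Python: a multiple linear regression at each node, followed by a non-linear squash. The weights, biases and crop-style inputs below are made-up numbers that only show the data flow, and nothing here is trained. Using tanh for the hidden layer and sigmoid for the output is one illustrative choice among the activation functions named above.

```python
import math
from typing import Callable, List, Sequence

Activation = Callable[[float], float]


def sigmoid(z: float) -> float:
    """S-shaped squash into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def hard_tanh(z: float) -> float:
    """Piecewise-linear approximation of tanh, clipped to [-1, 1]."""
    return max(-1.0, min(1.0, z))


def node(inputs: Sequence[float], weights: Sequence[float], bias: float,
         activation: Activation) -> float:
    """One node: a multiple linear regression followed by a non-linear squash."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer(inputs: Sequence[float], weight_rows: Sequence[Sequence[float]],
          biases: Sequence[float], activation: Activation) -> List[float]:
    """A node layer: every node sees every input, each with its own weights."""
    return [node(inputs, row, b, activation) for row, b in zip(weight_rows, biases)]


# Hypothetical, already-scaled inputs: fertilizer, sunlight, rainfall.
x = [0.5, 0.8, 0.3]

# Hidden layer: two nodes, each mixing the three inputs in different proportions.
hidden = layer(x, [[0.4, -0.2, 0.7], [-0.5, 0.9, 0.1]], [0.0, 0.1], math.tanh)
# Output layer: one node that reads the hidden layer's outputs as its inputs.
output = layer(hidden, [[1.2, -0.8]], [0.05], sigmoid)
print(hidden, output)
```

Each layer's output list becomes the next layer's input list. Because of the squash, no output grows without limit: tanh keeps the hidden values between -1 and 1, and sigmoid keeps the final value between 0 and 1.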

The output of each node is squashed by its activation function (into the range 0 to 1, in the case of sigmoid). It is then passed as input to the next layer in a feed-forward neural network, and so on until the signal reaches the final layer of the net, where decisions are made.

Gradient Descent

One commonly used optimization algorithm that adjusts weights according to the error they caused is called “gradient descent.”

Gradient is another word for slope. In its typical form on an x-y graph, slope represents how two variables relate to each other: rise over run, the change in money over the change in time, etc. In this particular case, the slope we care about describes the relationship between the network’s error and a single weight; that is, how the error varies as the weight is adjusted.

To put a finer point on it, which weight will produce the least error? Which one correctly represents the signals contained in the input data, and translates them to a correct classification? Which one can hear “nose” in an input image, and know that it should be labeled as a face and not a frying pan?

As a neural network learns, it slowly adjusts many weights so that they can map signal to meaning correctly.
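The idea of the slope between the error and a single weight can be sketched directly. The sketch nudges each parameter slightly, measures how the error changes, and steps the parameter downhill, fitting the f(x) = 3x + 12 example mentioned earlier. Several choices here are assumptions for illustration: mean squared error as the error measure, a learning rate of 0.05, 2,000 steps and five hand-picked x values. Deep learning libraries compute these slopes analytically with backpropagation rather than by nudging each weight.

```python
from typing import Callable, List, Tuple

Data = List[Tuple[float, float]]


def mean_squared_error(w: float, b: float, data: Data) -> float:
    """Average squared gap between the targets and the line w * x + b."""
    return sum((y - (w * x + b)) ** 2 for x, y in data) / len(data)


def slope(f: Callable[[float], float], value: float, eps: float = 1e-6) -> float:
    """Numerical slope: how much f changes as its argument is nudged."""
    return (f(value + eps) - f(value - eps)) / (2 * eps)


def gradient_descent(data: Data, learning_rate: float = 0.05,
                     steps: int = 2000) -> Tuple[float, float]:
    w, b = 0.0, 0.0
    for _ in range(steps):
        # Slope of the error with respect to each parameter, holding the other fixed.
        dw = slope(lambda w_: mean_squared_error(w_, b, data), w)
        db = slope(lambda b_: mean_squared_error(w, b_, data), b)
        # Step downhill: move each parameter against its slope.
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b


data = [(x, 3 * x + 12) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b = gradient_descent(data)
print(round(w, 3), round(b, 3))
```

The learning rate controls the trade-off. Larger steps reach the bottom faster but can overshoot it, and smaller steps are safer but slower.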
